Flood Mapping Explained

import pystac_client

from odc import stac as odc_stac

import xarray as xr

import numpy as np

Dask makes parallel computing easy by providing an API that mirrors familiar libraries such as pandas and NumPy. This allows the workflow presented here, an adaptation of the TU Wien Bayesian flood mapping algorithm, to scale efficiently. Data size becomes the main limiting factor once the data grow larger than RAM. For this reason, we partition the data into chunks, which Dask's task scheduler distributes to the workers as efficiently as possible. Although Dask can choose many settings automatically, we can also tune some parameters to improve the performance of the workflow in the target processing environment. Note that the best values depend heavily on your own machine's specifications.
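The idea behind chunking can be illustrated with plain NumPy. The sketch below is a simplified, sequential stand-in for what Dask does in parallel, and the helper name chunked_mean is hypothetical (it is not part of Dask): it splits a 2-D array into blocks of at most chunk × chunk elements, reduces each block to a partial sum and count, and combines the partial results at the end, so only one block is worked on at a time.

```python
import numpy as np


def chunked_mean(arr: np.ndarray, chunk: int) -> float:
    """Mean of a 2-D array computed block by block (hypothetical helper).

    Each block is reduced to a partial (sum, count) pair, and the pairs
    are combined at the end, similar to how per-chunk results are aggregated.
    """
    total, count = 0.0, 0
    rows, cols = arr.shape
    for r0 in range(0, rows, chunk):
        for c0 in range(0, cols, chunk):
            block = arr[r0:r0 + chunk, c0:c0 + chunk]  # one "chunk"
            total += float(block.sum())
            count += block.size
    return total / count


data = np.arange(1500 * 2000, dtype=np.float64).reshape(1500, 2000)
print(chunked_mean(data, chunk=1300), data.mean())
```

Here the whole array is already in memory; in a genuinely larger-than-memory setting, each block would instead be read from disk just before it is reduced, which is what lets Dask process data that never fits into RAM at once.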

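We then set up the Dask client. Setting processes=False runs all workers as threads inside a single process, so data do not have to be serialized and sent between worker processes. This avoids most inter-worker communication overhead and suits NumPy- and xarray-based workloads like this one, because NumPy releases the GIL for most numerical operations.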

from dask.distributed import Client, wait

client = Client(processes=False, threads_per_worker=2, n_workers=3, memory_limit="12GB")

client

| Cluster type | Status | Workers | Total threads | Total memory | Using processes |
|---|---|---|---|---|---|
| distributed.LocalCluster | running | 3 | 6 | 33.53 GiB | False |

| Worker | Threads | Memory |
|---|---|---|
| 0 | 2 | 11.18 GiB |
| 1 | 2 | 11.18 GiB |
| 2 | 2 | 11.18 GiB |

Dashboard: http://10.1.0.77:8787/status
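The thread and memory totals of the cluster follow directly from the Client arguments. Assuming Dask reads "12GB" in decimal units (1 GB = 10^9 bytes) while reporting memory in binary units (1 GiB = 2^30 bytes), the arithmetic can be checked in plain Python:

```python
# Values passed to Client(...) above
n_workers = 3
threads_per_worker = 2
memory_limit_bytes = 12 * 10**9  # "12GB", assumed to be decimal gigabytes

GIB = 2**30  # one gibibyte, the unit shown in the cluster summary

total_threads = n_workers * threads_per_worker
per_worker_gib = memory_limit_bytes / GIB
total_gib = n_workers * memory_limit_bytes / GIB

print(total_threads, round(per_worker_gib, 2), round(total_gib, 2))
```

This explains why 3 workers with a "12GB" limit show up as 11.18 GiB each and 33.53 GiB in total. If exactly 12 GiB per worker is intended, the limit can be given as "12GiB" instead.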

In conjunction with setting up the Dask client, we chunk the arrays along three dimensions (time, latitude and longitude) using the following chunk sizes, which gave the best performance on my setup.

chunks = {"time": 1, "latitude": 1300, "longitude": 1300}
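As a rough guide to how much memory a single chunk occupies, its size in bytes is the product of the chunk shape and the item size of the data type. The dtype of the loaded cube depends on how it is loaded and scaled later, so the sketch below simply shows several candidate dtypes:

```python
import numpy as np

chunks = {"time": 1, "latitude": 1300, "longitude": 1300}

n_elements = int(np.prod(list(chunks.values())))  # elements per chunk

for dtype in (np.int16, np.float32, np.float64):
    nbytes = n_elements * np.dtype(dtype).itemsize
    print(f"{np.dtype(dtype).name:>8}: {nbytes / 2**20:.1f} MiB per chunk")
```

Multiplying the per-chunk size by the number of threads gives a first estimate of how much working memory the chunks being processed at the same time require.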

Cube Definitions

The following generic specifications define the grid on which the data are presented.

crs = "EPSG:4326" # Coordinate Reference System - World Geodetic System 1984 (WGS84) in this case

res = 0.00018 # grid spacing in degrees, approximately 20 m in the north-south direction
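A step of 0.00018° corresponds to about 20 m only in the north-south direction; the east-west distance of the same step shrinks with the cosine of latitude. The quick check below assumes a spherical Earth with a mean radius of 6,371 km (the WGS 84 ellipsoid gives slightly different values) and uses the centre latitude of the study area selected below (54.3° to 54.6° N):

```python
import math

EARTH_RADIUS_M = 6_371_000  # spherical approximation of the Earth
res = 0.00018  # grid step in degrees

metres_per_degree = 2 * math.pi * EARTH_RADIUS_M / 360
north_south_m = res * metres_per_degree

center_lat = (54.3 + 54.6) / 2  # centre of the study-area latitude range
east_west_m = north_south_m * math.cos(math.radians(center_lat))

print(f"{north_south_m:.1f} m north-south, {east_west_m:.1f} m east-west")
```

At this latitude, pixels on the EPSG:4326 grid are therefore roughly 20 m tall but only about 12 m wide.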

Northern Germany Flood

Storm Babet hit Denmark and the northern coast of Germany on 20 October 2023 (Wikipedia). Here, an area around Zingst on the Baltic coast of northern Germany is selected as the study area.

time_range = "2022-10-11/2022-10-25"

minlon, maxlon = 12.3, 13.1

minlat, maxlat = 54.3, 54.6

bounding_box = [minlon, minlat, maxlon, maxlat]
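To get a feel for the size of the resulting cube, the number of pixels along each axis can be estimated from the bounding box and the resolution, and from that the number of spatial chunks per time step. This is only an approximation: the exact pixel grid is determined later by the loading library (for example, how it snaps the bounding box to the resolution), which is not reproduced here.

```python
import math

res = 0.00018
minlon, maxlon = 12.3, 13.1
minlat, maxlat = 54.3, 54.6
chunks = {"time": 1, "latitude": 1300, "longitude": 1300}

n_lon = math.ceil((maxlon - minlon) / res)  # approximate pixels along longitude
n_lat = math.ceil((maxlat - minlat) / res)  # approximate pixels along latitude

chunks_lon = math.ceil(n_lon / chunks["longitude"])
chunks_lat = math.ceil(n_lat / chunks["latitude"])

print(n_lon, n_lat, chunks_lon * chunks_lat)
```

With these settings, each time step is split into only a handful of spatial chunks, so most of the parallelism comes from the time dimension, where every acquisition forms its own chunk.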

EODC STAC Catalog

The pystac_client library opens a connection to the EODC STAC catalog. The result is a catalog object that can be used to discover the collections and items hosted at EODC.

eodc_catalog = pystac_client.Client.open("https://stac.eodc.eu/api/v1")
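Searches against a STAC API follow the STAC API Item Search specification, in which collections, bbox and datetime are standard query parameters. The sketch below builds such a request body with the standard library only, to show roughly what the search below amounts to. It is an illustration, not pystac_client's exact request: the library may add further fields (such as a page limit) or use GET instead of POST, and the /search endpoint path is assumed from the specification.

```python
import json

# Query parameters as defined earlier in this notebook
bounding_box = [12.3, 54.3, 13.1, 54.6]  # [minlon, minlat, maxlon, maxlat]
time_range = "2022-10-11/2022-10-25"  # ISO 8601 interval "start/end"

# JSON body of a POST request to the Item Search endpoint
search_body = {
    "collections": ["SENTINEL1_SIG0_20M"],
    "bbox": bounding_box,
    "datetime": time_range,
}
search_url = "https://stac.eodc.eu/api/v1/search"  # assumed endpoint path

print(search_url)
print(json.dumps(search_body, indent=2))
```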

Microwave Backscatter Measurements

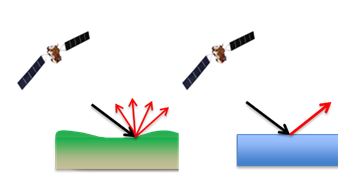

The basic premise of microwave backscattering is shown in the sketch: the backscattering characteristics of land and water differ considerably. Calm open water reflects the radar signal away from the sensor like a mirror and therefore appears dark, whereas rougher land surfaces scatter more energy back. With this knowledge, we can detect when a pixel with a predominantly land-like signature changes to a water-like signature during a flood.

Schematic backscattering over land and water. Image from Geological Survey Ireland
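In the spirit of the Bayesian approach mentioned at the start, this change can be framed as a probabilistic decision. As a toy illustration only, the sketch below assumes that σ0 (in dB) over water and over land each follow a normal distribution, with made-up means and standard deviations and equal prior probabilities, and applies Bayes' theorem to obtain the probability that a pixel is water. The parameter values and function names are hypothetical and are not those of the TU Wien implementation.

```python
import math


def normal_pdf(x: float, mean: float, std: float) -> float:
    """Probability density of a normal distribution at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2 * math.pi))


# Hypothetical class-conditional distributions of sigma0 in dB
WATER = {"mean": -22.0, "std": 2.5}
LAND = {"mean": -10.0, "std": 3.0}


def p_water(sigma0_db: float, prior_water: float = 0.5) -> float:
    """Posterior probability of water given an observed sigma0 (Bayes' theorem)."""
    joint_water = normal_pdf(sigma0_db, **WATER) * prior_water
    joint_land = normal_pdf(sigma0_db, **LAND) * (1 - prior_water)
    return joint_water / (joint_water + joint_land)


for value in (-25.0, -16.0, -5.0):
    print(value, round(p_water(value), 3))
```

Low backscatter values yield a high water probability and high values a low one; in practice, the distributions would be estimated from data rather than fixed by hand.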

We search for Sentinel-1 microwave backscatter (\(\sigma_0\) [1]) at 20 m resolution as follows:

search = eodc_catalog.search(

collections="SENTINEL1_SIG0_20M",

bbox=bounding_box,

datetime=time_range,

)

items_sig0 = search.item_collection()

items_sig0

items_sig0 is an ItemCollection (a GeoJSON FeatureCollection) with 67 items. The items shown here are:

| Item id | datetime | Orbit state | Relative orbit | Equi7 tile |
|---|---|---|---|---|
| SIG0_20221025T050931_D022_E051N021T3_EU020M_V1M1R1_S1AIWGRDH | 2022-10-25T05:09:31Z | descending | 22 | EU020M_E051N021T3 |
| SIG0_20221025T050906_D022_E051N021T3_EU020M_V1M1R1_S1AIWGRDH | 2022-10-25T05:09:06Z | descending | 22 | EU020M_E051N021T3 |
| SIG0_20221025T050841_D022_E051N021T3_EU020M_V1M1R1_S1AIWGRDH | 2022-10-25T05:08:41Z | descending | 22 | EU020M_E051N021T3 |
| SIG0_20221025T050816_D022_E051N021T3_EU020M_V1M1R1_S1AIWGRDH | 2022-10-25T05:08:16Z | descending | 22 | EU020M_E051N021T3 |
| SIG0_20221024T171747_A015_E048N021T3_EU020M_V1M1R1_S1AIWGRDH | 2022-10-24T17:17:47Z | ascending | 15 | EU020M_E048N021T3 |
| SIG0_20221024T171722_A015_E048N021T3_EU020M_V1M1R1_S1AIWGRDH | 2022-10-24T17:17:22Z | ascending | 15 | EU020M_E048N021T3 |

These items share the following metadata:

| Property | Value |
|---|---|
| Platform | Sentinel-1A (international designator 2014-016A) |
| Product and mode | GRD, IW, C-band (center frequency 5.405 GHz) |
| Polarizations | VV, VH |
| Resolution (range × azimuth) | 40 m × 40 m |
| Pixel spacing (gsd) | 20 m |
| Observation direction | right |
| Noise removal | thermal and border noise removed; slice gaps not filled |
| Projection | Equi7Grid EU, Azimuthal Equidistant on WGS 84 (centre 53° N, 24° E); tiles of 15000 × 15000 pixels at 20 m (300 km) |
| Assets | VV and VH (GeoTIFF, int16, scale 10, offset 0, nodata -9999); thumbnail (PNG preview of VV) |

The two Equi7 tiles covered by these items have the following bounding boxes:

| Equi7 tile | Longitude | Latitude | proj:bbox (m) |
|---|---|---|---|
| EU020M_E051N021T3 | 12.44° to 17.53° E | 52.31° to 55.31° N | 5100000, 2100000, 5400000, 2400000 |
| EU020M_E048N021T3 | 7.88° to 13.15° E | 51.82° to 54.96° N | 4800000, 2100000, 5100000, 2400000 |

Each asset is served over HTTPS, for example https://data.eodc.eu/collections/SENTINEL1_SIG0_20M/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221025T050931__VV_D022_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif, and also has an alternate local path under /eodc/products/eodc.eu/S1_CSAR_IWGRDH/SIG0/V1M1R1/.

- type "image/png"

- title "Preview Image"

- description "thumbnail image of asset VV"

roles[] 1 items

- 0 "thumbnail"

- collection "SENTINEL1_SIG0_20M"

6

- type "Feature"

- stac_version "1.0.0"

stac_extensions[] 4 items

- 0 "https://stac-extensions.github.io/sat/v1.0.0/schema.json"

- 1 "https://stac-extensions.github.io/sar/v1.0.0/schema.json"

- 2 "https://stac-extensions.github.io/projection/v1.1.0/schema.json"

- 3 "https://stac-extensions.github.io/raster/v1.1.0/schema.json"

- id "SIG0_20221024T171657_A015_E048N021T3_EU020M_V1M1R1_S1AIWGRDH"

geometry

- type "Polygon"

coordinates[] 1 items

0[] 5 items

0[] 2 items

- 0 8.845755602

- 1 51.822339363

1[] 2 items

- 0 7.875111625

- 1 54.441998586

2[] 2 items

- 0 12.440351848

- 1 54.962810256

3[] 2 items

- 0 13.14982232

- 1 52.306556519

4[] 2 items

- 0 8.845755602

- 1 51.822339363

bbox[] 4 items

- 0 7.875111625004859

- 1 51.82233936342244

- 2 13.149822320324414

- 3 54.962810255948426

properties

- gsd 20

- parent "S1A_IW_GRDH_1SDV_20221024T171657_20221024T171722_045587_057357_BE92.zip"

- checksum "67cdd9693576e378135c05314532d98c"

- datetime "2022-10-24T17:16:57Z"

blocksize

- x 15000

- y 5

proj:bbox[] 4 items

- 0 4800000

- 1 2100000

- 2 5100000

- 3 2400000

- proj:wkt2 "PROJCS["Azimuthal_Equidistant",GEOGCS["WGS 84",DATUM["WGS_1984",SPHEROID["WGS 84",6378137,298.257223563,AUTHORITY["EPSG","7030"]],AUTHORITY["EPSG","6326"]],PRIMEM["Greenwich",0],UNIT["degree",0.0174532925199433],AUTHORITY["EPSG","4326"]],PROJECTION["Azimuthal_Equidistant"],PARAMETER["latitude_of_center",53],PARAMETER["longitude_of_center",24],PARAMETER["false_easting",5837287.81977],PARAMETER["false_northing",2121415.69617],UNIT["metre",1,AUTHORITY["EPSG","9001"]]]"

proj:shape[] 2 items

- 0 15000

- 1 15000

- Equi7_TileID "EU020M_E048N021T3"

- constellation "sentinel-1"

proj:geometry

- type "Polygon"

coordinates[] 1 items

0[] 5 items

0[] 2 items

- 0 4800000.0

- 1 2100000.0

1[] 2 items

- 0 4800000.0

- 1 2400000.0

2[] 2 items

- 0 5100000.0

- 1 2400000.0

3[] 2 items

- 0 5100000.0

- 1 2100000.0

4[] 2 items

- 0 4800000.0

- 1 2100000.0

proj:transform[] 6 items

- 0 20

- 1 0

- 2 4800000

- 3 0

- 4 -20

- 5 2400000

- sat:orbit_state "ascending"

- sar:product_type "GRD"

- slice_gap_filled False

sar:polarizations[] 2 items

- 0 "VH"

- 1 "VV"

- sar:frequency_band "C"

- sat:relative_orbit 15

- sar:instrument_mode "IW"

- border_noise_removed True

- sar:center_frequency 5.405

- sar:resolution_range 40

- thermal_noise_removed True

- sar:resolution_azimuth 40

- sar:pixel_spacing_range 20

- sar:observation_direction "right"

- sar:pixel_spacing_azimuth 20

- sat:platform_international_designator "2014-016A"

links[] 4 items

0

- rel "collection"

- href "https://stac.eodc.eu/api/v1/collections/SENTINEL1_SIG0_20M"

- type "application/json"

1

- rel "parent"

- href "https://stac.eodc.eu/api/v1/collections/SENTINEL1_SIG0_20M"

- type "application/json"

2

- rel "root"

- href "https://stac.eodc.eu/api/v1"

- type "application/json"

- title "EODC Data Catalogue"

3

- rel "self"

- href "https://stac.eodc.eu/api/v1/collections/SENTINEL1_SIG0_20M/items/SIG0_20221024T171657_A015_E048N021T3_EU020M_V1M1R1_S1AIWGRDH"

- type "application/geo+json"

assets

VH

- href "https://data.eodc.eu/collections/SENTINEL1_SIG0_20M/V1M1R1/EQUI7_EU020M/E048N021T3/SIG0_20221024T171657__VH_A015_E048N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "VH Polarization"

- description "The Sigma Nought backscatter in VH polarization"

eo:bands[] 1 items

0

- name "VH"

- description "Sigma Nought in VH polarization"

alternate

local

- href "/eodc/products/eodc.eu/S1_CSAR_IWGRDH/SIG0/V1M1R1/EQUI7_EU020M/E048N021T3/SIG0_20221024T171657__VH_A015_E048N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "Local file path of the asset."

raster:bands[] 1 items

0

- scale 10

- nodata -9999

- offset 0

- data_type "int16"

- spatial_resolution 20

VV

- href "https://data.eodc.eu/collections/SENTINEL1_SIG0_20M/V1M1R1/EQUI7_EU020M/E048N021T3/SIG0_20221024T171657__VV_A015_E048N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "VV Polarization"

- description "The Sigma Nought backscatter in VV polarization"

eo:bands[] 1 items

0

- name "VV"

- description "Sigma Nought in VV polarization"

alternate

local

- href "/eodc/products/eodc.eu/S1_CSAR_IWGRDH/SIG0/V1M1R1/EQUI7_EU020M/E048N021T3/SIG0_20221024T171657__VV_A015_E048N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "Local file path of the asset."

raster:bands[] 1 items

0

- scale 10

- nodata -9999

- offset 0

- data_type "int16"

- spatial_resolution 20

thumbnail

- href "https://data.eodc.eu/collections/SENTINEL1_SIG0_20M/V1M1R1/EQUI7_EU020M/E048N021T3/SIG0_20221024T171657__VV_A015_E048N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif/thumbnail"

- type "image/png"

- title "Preview Image"

- description "thumbnail image of asset VV"

roles[] 1 items

- 0 "thumbnail"

- collection "SENTINEL1_SIG0_20M"

7

- type "Feature"

- stac_version "1.0.0"

stac_extensions[] 4 items

- 0 "https://stac-extensions.github.io/sat/v1.0.0/schema.json"

- 1 "https://stac-extensions.github.io/sar/v1.0.0/schema.json"

- 2 "https://stac-extensions.github.io/projection/v1.1.0/schema.json"

- 3 "https://stac-extensions.github.io/raster/v1.1.0/schema.json"

- id "SIG0_20221023T163654_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH"

geometry

- type "Polygon"

coordinates[] 1 items

0[] 5 items

0[] 2 items

- 0 13.14982232

- 1 52.306556519

1[] 2 items

- 0 12.440351848

- 1 54.962810256

2[] 2 items

- 0 17.104148268

- 1 55.311871657

3[] 2 items

- 0 17.53297656

- 1 52.630546184

4[] 2 items

- 0 13.14982232

- 1 52.306556519

bbox[] 4 items

- 0 12.440351847963198

- 1 52.30655651888066

- 2 17.532976559623787

- 3 55.31187165740993

properties

- gsd 20

- parent "S1A_IW_GRDH_1SDV_20221023T163654_20221023T163719_045572_0572CB_554F.zip"

- checksum "41506ac47eef97b5e7e431bf6baf6817"

- datetime "2022-10-23T16:36:54Z"

blocksize

- x 15000

- y 5

proj:bbox[] 4 items

- 0 5100000

- 1 2100000

- 2 5400000

- 3 2400000

- proj:wkt2 "PROJCS["Azimuthal_Equidistant",GEOGCS["WGS 84",DATUM["WGS_1984",SPHEROID["WGS 84",6378137,298.257223563,AUTHORITY["EPSG","7030"]],AUTHORITY["EPSG","6326"]],PRIMEM["Greenwich",0],UNIT["degree",0.0174532925199433],AUTHORITY["EPSG","4326"]],PROJECTION["Azimuthal_Equidistant"],PARAMETER["latitude_of_center",53],PARAMETER["longitude_of_center",24],PARAMETER["false_easting",5837287.81977],PARAMETER["false_northing",2121415.69617],UNIT["metre",1,AUTHORITY["EPSG","9001"]]]"

proj:shape[] 2 items

- 0 15000

- 1 15000

- Equi7_TileID "EU020M_E051N021T3"

- constellation "sentinel-1"

proj:geometry

- type "Polygon"

coordinates[] 1 items

0[] 5 items

0[] 2 items

- 0 5100000.0

- 1 2100000.0

1[] 2 items

- 0 5100000.0

- 1 2400000.0

2[] 2 items

- 0 5400000.0

- 1 2400000.0

3[] 2 items

- 0 5400000.0

- 1 2100000.0

4[] 2 items

- 0 5100000.0

- 1 2100000.0

proj:transform[] 6 items

- 0 20

- 1 0

- 2 5100000

- 3 0

- 4 -20

- 5 2400000

- sat:orbit_state "ascending"

- sar:product_type "GRD"

- slice_gap_filled False

sar:polarizations[] 2 items

- 0 "VH"

- 1 "VV"

- sar:frequency_band "C"

- sat:relative_orbit 175

- sar:instrument_mode "IW"

- border_noise_removed True

- sar:center_frequency 5.405

- sar:resolution_range 40

- thermal_noise_removed True

- sar:resolution_azimuth 40

- sar:pixel_spacing_range 20

- sar:observation_direction "right"

- sar:pixel_spacing_azimuth 20

- sat:platform_international_designator "2014-016A"

links[] 4 items

0

- rel "collection"

- href "https://stac.eodc.eu/api/v1/collections/SENTINEL1_SIG0_20M"

- type "application/json"

1

- rel "parent"

- href "https://stac.eodc.eu/api/v1/collections/SENTINEL1_SIG0_20M"

- type "application/json"

2

- rel "root"

- href "https://stac.eodc.eu/api/v1"

- type "application/json"

- title "EODC Data Catalogue"

3

- rel "self"

- href "https://stac.eodc.eu/api/v1/collections/SENTINEL1_SIG0_20M/items/SIG0_20221023T163654_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH"

- type "application/geo+json"

assets

VH

- href "https://data.eodc.eu/collections/SENTINEL1_SIG0_20M/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T163654__VH_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "VH Polarization"

- description "The Sigma Nought backscatter in VH polarization"

eo:bands[] 1 items

0

- name "VH"

- description "Sigma Nought in VH polarization"

alternate

local

- href "/eodc/products/eodc.eu/S1_CSAR_IWGRDH/SIG0/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T163654__VH_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "Local file path of the asset."

raster:bands[] 1 items

0

- scale 10

- nodata -9999

- offset 0

- data_type "int16"

- spatial_resolution 20

VV

- href "https://data.eodc.eu/collections/SENTINEL1_SIG0_20M/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T163654__VV_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "VV Polarization"

- description "The Sigma Nought backscatter in VV polarization"

eo:bands[] 1 items

0

- name "VV"

- description "Sigma Nought in VV polarization"

alternate

local

- href "/eodc/products/eodc.eu/S1_CSAR_IWGRDH/SIG0/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T163654__VV_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "Local file path of the asset."

raster:bands[] 1 items

0

- scale 10

- nodata -9999

- offset 0

- data_type "int16"

- spatial_resolution 20

thumbnail

- href "https://data.eodc.eu/collections/SENTINEL1_SIG0_20M/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T163654__VV_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif/thumbnail"

- type "image/png"

- title "Preview Image"

- description "thumbnail image of asset VV"

roles[] 1 items

- 0 "thumbnail"

- collection "SENTINEL1_SIG0_20M"

8

- type "Feature"

- stac_version "1.0.0"

stac_extensions[] 4 items

- 0 "https://stac-extensions.github.io/sat/v1.0.0/schema.json"

- 1 "https://stac-extensions.github.io/sar/v1.0.0/schema.json"

- 2 "https://stac-extensions.github.io/projection/v1.1.0/schema.json"

- 3 "https://stac-extensions.github.io/raster/v1.1.0/schema.json"

- id "SIG0_20221023T163629_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH"

geometry

- type "Polygon"

coordinates[] 1 items

0[] 5 items

0[] 2 items

- 0 13.14982232

- 1 52.306556519

1[] 2 items

- 0 12.440351848

- 1 54.962810256

2[] 2 items

- 0 17.104148268

- 1 55.311871657

3[] 2 items

- 0 17.53297656

- 1 52.630546184

4[] 2 items

- 0 13.14982232

- 1 52.306556519

bbox[] 4 items

- 0 12.440351847963198

- 1 52.30655651888066

- 2 17.532976559623787

- 3 55.31187165740993

properties

- gsd 20

- parent "S1A_IW_GRDH_1SDV_20221023T163629_20221023T163654_045572_0572CB_2955.zip"

- checksum "4a15e841ec57e739bca9390e7eff5731"

- datetime "2022-10-23T16:36:29Z"

blocksize

- x 15000

- y 5

proj:bbox[] 4 items

- 0 5100000

- 1 2100000

- 2 5400000

- 3 2400000

- proj:wkt2 "PROJCS["Azimuthal_Equidistant",GEOGCS["WGS 84",DATUM["WGS_1984",SPHEROID["WGS 84",6378137,298.257223563,AUTHORITY["EPSG","7030"]],AUTHORITY["EPSG","6326"]],PRIMEM["Greenwich",0],UNIT["degree",0.0174532925199433],AUTHORITY["EPSG","4326"]],PROJECTION["Azimuthal_Equidistant"],PARAMETER["latitude_of_center",53],PARAMETER["longitude_of_center",24],PARAMETER["false_easting",5837287.81977],PARAMETER["false_northing",2121415.69617],UNIT["metre",1,AUTHORITY["EPSG","9001"]]]"

proj:shape[] 2 items

- 0 15000

- 1 15000

- Equi7_TileID "EU020M_E051N021T3"

- constellation "sentinel-1"

proj:geometry

- type "Polygon"

coordinates[] 1 items

0[] 5 items

0[] 2 items

- 0 5100000.0

- 1 2100000.0

1[] 2 items

- 0 5100000.0

- 1 2400000.0

2[] 2 items

- 0 5400000.0

- 1 2400000.0

3[] 2 items

- 0 5400000.0

- 1 2100000.0

4[] 2 items

- 0 5100000.0

- 1 2100000.0

proj:transform[] 6 items

- 0 20

- 1 0

- 2 5100000

- 3 0

- 4 -20

- 5 2400000

- sat:orbit_state "ascending"

- sar:product_type "GRD"

- slice_gap_filled False

sar:polarizations[] 2 items

- 0 "VV"

- 1 "VH"

- sar:frequency_band "C"

- sat:relative_orbit 175

- sar:instrument_mode "IW"

- border_noise_removed True

- sar:center_frequency 5.405

- sar:resolution_range 40

- thermal_noise_removed True

- sar:resolution_azimuth 40

- sar:pixel_spacing_range 20

- sar:observation_direction "right"

- sar:pixel_spacing_azimuth 20

- sat:platform_international_designator "2014-016A"

links[] 4 items

0

- rel "collection"

- href "https://stac.eodc.eu/api/v1/collections/SENTINEL1_SIG0_20M"

- type "application/json"

1

- rel "parent"

- href "https://stac.eodc.eu/api/v1/collections/SENTINEL1_SIG0_20M"

- type "application/json"

2

- rel "root"

- href "https://stac.eodc.eu/api/v1"

- type "application/json"

- title "EODC Data Catalogue"

3

- rel "self"

- href "https://stac.eodc.eu/api/v1/collections/SENTINEL1_SIG0_20M/items/SIG0_20221023T163629_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH"

- type "application/geo+json"

assets

VH

- href "https://data.eodc.eu/collections/SENTINEL1_SIG0_20M/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T163629__VH_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "VH Polarization"

- description "The Sigma Nought backscatter in VH polarization"

eo:bands[] 1 items

0

- name "VH"

- description "Sigma Nought in VH polarization"

alternate

local

- href "/eodc/products/eodc.eu/S1_CSAR_IWGRDH/SIG0/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T163629__VH_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "Local file path of the asset."

raster:bands[] 1 items

0

- scale 10

- nodata -9999

- offset 0

- data_type "int16"

- spatial_resolution 20

VV

- href "https://data.eodc.eu/collections/SENTINEL1_SIG0_20M/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T163629__VV_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "VV Polarization"

- description "The Sigma Nought backscatter in VV polarization"

eo:bands[] 1 items

0

- name "VV"

- description "Sigma Nought in VV polarization"

alternate

local

- href "/eodc/products/eodc.eu/S1_CSAR_IWGRDH/SIG0/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T163629__VV_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "Local file path of the asset."

raster:bands[] 1 items

0

- scale 10

- nodata -9999

- offset 0

- data_type "int16"

- spatial_resolution 20

thumbnail

- href "https://data.eodc.eu/collections/SENTINEL1_SIG0_20M/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T163629__VV_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif/thumbnail"

- type "image/png"

- title "Preview Image"

- description "thumbnail image of asset VV"

roles[] 1 items

- 0 "thumbnail"

- collection "SENTINEL1_SIG0_20M"

9

- type "Feature"

- stac_version "1.0.0"

stac_extensions[] 4 items

- 0 "https://stac-extensions.github.io/sat/v1.0.0/schema.json"

- 1 "https://stac-extensions.github.io/sar/v1.0.0/schema.json"

- 2 "https://stac-extensions.github.io/projection/v1.1.0/schema.json"

- 3 "https://stac-extensions.github.io/raster/v1.1.0/schema.json"

- id "SIG0_20221023T163604_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH"

geometry

- type "Polygon"

coordinates[] 1 items

0[] 5 items

0[] 2 items

- 0 13.14982232

- 1 52.306556519

1[] 2 items

- 0 12.440351848

- 1 54.962810256

2[] 2 items

- 0 17.104148268

- 1 55.311871657

3[] 2 items

- 0 17.53297656

- 1 52.630546184

4[] 2 items

- 0 13.14982232

- 1 52.306556519

bbox[] 4 items

- 0 12.440351847963198

- 1 52.30655651888066

- 2 17.532976559623787

- 3 55.31187165740993

properties

- gsd 20

- parent "S1A_IW_GRDH_1SDV_20221023T163604_20221023T163629_045572_0572CB_2E8B.zip"

- checksum "f2ae24583af92a6825e4a921b208eb0e"

- datetime "2022-10-23T16:36:04Z"

blocksize

- x 15000

- y 5

proj:bbox[] 4 items

- 0 5100000

- 1 2100000

- 2 5400000

- 3 2400000

- proj:wkt2 "PROJCS["Azimuthal_Equidistant",GEOGCS["WGS 84",DATUM["WGS_1984",SPHEROID["WGS 84",6378137,298.257223563,AUTHORITY["EPSG","7030"]],AUTHORITY["EPSG","6326"]],PRIMEM["Greenwich",0],UNIT["degree",0.0174532925199433],AUTHORITY["EPSG","4326"]],PROJECTION["Azimuthal_Equidistant"],PARAMETER["latitude_of_center",53],PARAMETER["longitude_of_center",24],PARAMETER["false_easting",5837287.81977],PARAMETER["false_northing",2121415.69617],UNIT["metre",1,AUTHORITY["EPSG","9001"]]]"

proj:shape[] 2 items

- 0 15000

- 1 15000

- Equi7_TileID "EU020M_E051N021T3"

- constellation "sentinel-1"

proj:geometry

- type "Polygon"

coordinates[] 1 items

0[] 5 items

0[] 2 items

- 0 5100000.0

- 1 2100000.0

1[] 2 items

- 0 5100000.0

- 1 2400000.0

2[] 2 items

- 0 5400000.0

- 1 2400000.0

3[] 2 items

- 0 5400000.0

- 1 2100000.0

4[] 2 items

- 0 5100000.0

- 1 2100000.0

proj:transform[] 6 items

- 0 20

- 1 0

- 2 5100000

- 3 0

- 4 -20

- 5 2400000

- sat:orbit_state "ascending"

- sar:product_type "GRD"

- slice_gap_filled False

sar:polarizations[] 2 items

- 0 "VV"

- 1 "VH"

- sar:frequency_band "C"

- sat:relative_orbit 175

- sar:instrument_mode "IW"

- border_noise_removed True

- sar:center_frequency 5.405

- sar:resolution_range 40

- thermal_noise_removed True

- sar:resolution_azimuth 40

- sar:pixel_spacing_range 20

- sar:observation_direction "right"

- sar:pixel_spacing_azimuth 20

- sat:platform_international_designator "2014-016A"

links[] 4 items

0

- rel "collection"

- href "https://stac.eodc.eu/api/v1/collections/SENTINEL1_SIG0_20M"

- type "application/json"

1

- rel "parent"

- href "https://stac.eodc.eu/api/v1/collections/SENTINEL1_SIG0_20M"

- type "application/json"

2

- rel "root"

- href "https://stac.eodc.eu/api/v1"

- type "application/json"

- title "EODC Data Catalogue"

3

- rel "self"

- href "https://stac.eodc.eu/api/v1/collections/SENTINEL1_SIG0_20M/items/SIG0_20221023T163604_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH"

- type "application/geo+json"

assets

VH

- href "https://data.eodc.eu/collections/SENTINEL1_SIG0_20M/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T163604__VH_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "VH Polarization"

- description "The Sigma Nought backscatter in VH polarization"

eo:bands[] 1 items

0

- name "VH"

- description "Sigma Nought in VH polarization"

alternate

local

- href "/eodc/products/eodc.eu/S1_CSAR_IWGRDH/SIG0/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T163604__VH_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "Local file path of the asset."

raster:bands[] 1 items

0

- scale 10

- nodata -9999

- offset 0

- data_type "int16"

- spatial_resolution 20

VV

- href "https://data.eodc.eu/collections/SENTINEL1_SIG0_20M/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T163604__VV_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "VV Polarization"

- description "The Sigma Nought backscatter in VV polarization"

eo:bands[] 1 items

0

- name "VV"

- description "Sigma Nought in VV polarization"

alternate

local

- href "/eodc/products/eodc.eu/S1_CSAR_IWGRDH/SIG0/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T163604__VV_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "Local file path of the asset."

raster:bands[] 1 items

0

- scale 10

- nodata -9999

- offset 0

- data_type "int16"

- spatial_resolution 20

thumbnail

- href "https://data.eodc.eu/collections/SENTINEL1_SIG0_20M/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T163604__VV_A175_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif/thumbnail"

- type "image/png"

- title "Preview Image"

- description "thumbnail image of asset VV"

roles[] 1 items

- 0 "thumbnail"

- collection "SENTINEL1_SIG0_20M"

10

- type "Feature"

- stac_version "1.0.0"

stac_extensions[] 4 items

- 0 "https://stac-extensions.github.io/sat/v1.0.0/schema.json"

- 1 "https://stac-extensions.github.io/sar/v1.0.0/schema.json"

- 2 "https://stac-extensions.github.io/projection/v1.1.0/schema.json"

- 3 "https://stac-extensions.github.io/raster/v1.1.0/schema.json"

- id "SIG0_20221023T052551_D168_E051N021T3_EU020M_V1M1R1_S1AIWGRDH"

geometry

- type "Polygon"

coordinates[] 1 items

0[] 5 items

0[] 2 items

- 0 13.14982232

- 1 52.306556519

1[] 2 items

- 0 12.440351848

- 1 54.962810256

2[] 2 items

- 0 17.104148268

- 1 55.311871657

3[] 2 items

- 0 17.53297656

- 1 52.630546184

4[] 2 items

- 0 13.14982232

- 1 52.306556519

bbox[] 4 items

- 0 12.440351847963198

- 1 52.30655651888066

- 2 17.532976559623787

- 3 55.31187165740993

properties

- gsd 20

- parent "S1A_IW_GRDH_1SDV_20221023T052551_20221023T052616_045565_057294_F89D.zip"

- checksum "59695663bcc43dbd3d2cd7559a9078eb"

- datetime "2022-10-23T05:25:51Z"

blocksize

- x 15000

- y 5

proj:bbox[] 4 items

- 0 5100000

- 1 2100000

- 2 5400000

- 3 2400000

- proj:wkt2 "PROJCS["Azimuthal_Equidistant",GEOGCS["WGS 84",DATUM["WGS_1984",SPHEROID["WGS 84",6378137,298.257223563,AUTHORITY["EPSG","7030"]],AUTHORITY["EPSG","6326"]],PRIMEM["Greenwich",0],UNIT["degree",0.0174532925199433],AUTHORITY["EPSG","4326"]],PROJECTION["Azimuthal_Equidistant"],PARAMETER["latitude_of_center",53],PARAMETER["longitude_of_center",24],PARAMETER["false_easting",5837287.81977],PARAMETER["false_northing",2121415.69617],UNIT["metre",1,AUTHORITY["EPSG","9001"]]]"

proj:shape[] 2 items

- 0 15000

- 1 15000

- Equi7_TileID "EU020M_E051N021T3"

- constellation "sentinel-1"

proj:geometry

- type "Polygon"

coordinates[] 1 items

0[] 5 items

0[] 2 items

- 0 5100000.0

- 1 2100000.0

1[] 2 items

- 0 5100000.0

- 1 2400000.0

2[] 2 items

- 0 5400000.0

- 1 2400000.0

3[] 2 items

- 0 5400000.0

- 1 2100000.0

4[] 2 items

- 0 5100000.0

- 1 2100000.0

proj:transform[] 6 items

- 0 20

- 1 0

- 2 5100000

- 3 0

- 4 -20

- 5 2400000

- sat:orbit_state "descending"

- sar:product_type "GRD"

- slice_gap_filled False

sar:polarizations[] 2 items

- 0 "VH"

- 1 "VV"

- sar:frequency_band "C"

- sat:relative_orbit 168

- sar:instrument_mode "IW"

- border_noise_removed True

- sar:center_frequency 5.405

- sar:resolution_range 40

- thermal_noise_removed True

- sar:resolution_azimuth 40

- sar:pixel_spacing_range 20

- sar:observation_direction "right"

- sar:pixel_spacing_azimuth 20

- sat:platform_international_designator "2014-016A"

links[] 4 items

0

- rel "collection"

- href "https://stac.eodc.eu/api/v1/collections/SENTINEL1_SIG0_20M"

- type "application/json"

1

- rel "parent"

- href "https://stac.eodc.eu/api/v1/collections/SENTINEL1_SIG0_20M"

- type "application/json"

2

- rel "root"

- href "https://stac.eodc.eu/api/v1"

- type "application/json"

- title "EODC Data Catalogue"

3

- rel "self"

- href "https://stac.eodc.eu/api/v1/collections/SENTINEL1_SIG0_20M/items/SIG0_20221023T052551_D168_E051N021T3_EU020M_V1M1R1_S1AIWGRDH"

- type "application/geo+json"

assets

VH

- href "https://data.eodc.eu/collections/SENTINEL1_SIG0_20M/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T052551__VH_D168_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "VH Polarization"

- description "The Sigma Nought backscatter in VH polarization"

eo:bands[] 1 items

0

- name "VH"

- description "Sigma Nought in VH polarization"

alternate

local

- href "/eodc/products/eodc.eu/S1_CSAR_IWGRDH/SIG0/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T052551__VH_D168_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "Local file path of the asset."

raster:bands[] 1 items

0

- scale 10

- nodata -9999

- offset 0

- data_type "int16"

- spatial_resolution 20

VV

- href "https://data.eodc.eu/collections/SENTINEL1_SIG0_20M/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T052551__VV_D168_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "VV Polarization"

- description "The Sigma Nought backscatter in VV polarization"

eo:bands[] 1 items

0

- name "VV"

- description "Sigma Nought in VV polarization"

alternate

local

- href "/eodc/products/eodc.eu/S1_CSAR_IWGRDH/SIG0/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T052551__VV_D168_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif"

- type "image/tiff; application=geotiff"

- title "Local file path of the asset."

raster:bands[] 1 items

0

- scale 10

- nodata -9999

- offset 0

- data_type "int16"

- spatial_resolution 20

thumbnail

- href "https://data.eodc.eu/collections/SENTINEL1_SIG0_20M/V1M1R1/EQUI7_EU020M/E051N021T3/SIG0_20221023T052551__VV_D168_E051N021T3_EU020M_V1M1R1_S1AIWGRDH_TUWIEN.tif/thumbnail"

- type "image/png"

- title "Preview Image"

- description "thumbnail image of asset VV"

roles[] 1 items

- 0 "thumbnail"

- collection "SENTINEL1_SIG0_20M"

11

- type "Feature"

- stac_version "1.0.0"

stac_extensions[] 4 items

- 0 "https://stac-extensions.github.io/sat/v1.0.0/schema.json"

- 1 "https://stac-extensions.github.io/sar/v1.0.0/schema.json"

- 2 "https://stac-extensions.github.io/projection/v1.1.0/schema.json"

- 3 "https://stac-extensions.github.io/raster/v1.1.0/schema.json"

- id "SIG0_20221023T052551_D168_E048N021T3_EU020M_V1M1R1_S1AIWGRDH"

geometry

- type "Polygon"

coordinates[] 1 items

0[] 5 items

0[] 2 items

- 0 8.845755602

- 1 51.822339363

1[] 2 items

- 0 7.875111625

- 1 54.441998586

2[] 2 items

- 0 12.440351848

- 1 54.962810256

3[] 2 items

- 0 13.14982232

- 1 52.306556519

4[] 2 items

- 0 8.845755602

- 1 51.822339363

bbox[] 4 items

- 0 7.875111625004859

- 1 51.82233936342244

- 2 13.149822320324414

- 3 54.962810255948426

properties

- gsd 20

- parent "S1A_IW_GRDH_1SDV_20221023T052551_20221023T052616_045565_057294_F89D.zip"

- checksum "0bc086f3b2c575c1f6a68b88353880db"

- datetime "2022-10-23T05:25:51Z"

blocksize

- x 15000

- y 5

proj:bbox[] 4 items

- 0 4800000

- 1 2100000

- 2 5100000

- 3 2400000

- proj:wkt2 "PROJCS["Azimuthal_Equidistant",GEOGCS["WGS 84",DATUM["WGS_1984",SPHEROID["WGS 84",6378137,298.257223563,AUTHORITY["EPSG","7030"]],AUTHORITY["EPSG","6326"]],PRIMEM["Greenwich",0],UNIT["degree",0.0174532925199433],AUTHORITY["EPSG","4326"]],PROJECTION["Azimuthal_Equidistant"],PARAMETER["latitude_of_center",53],PARAMETER["longitude_of_center",24],PARAMETER["false_easting",5837287.81977],PARAMETER["false_northing",2121415.69617],UNIT["metre",1,AUTHORITY["EPSG","9001"]]]"

proj:shape[] 2 items

- 0 15000

- 1 15000

- Equi7_TileID "EU020M_E048N021T3"

- constellation "sentinel-1"

proj:geometry

- type "Polygon"

coordinates[] 1 items

0[] 5 items

0[] 2 items

- 0 4800000.0

- 1 2100000.0

1[] 2 items

- 0 4800000.0

- 1 2400000.0

2[] 2 items

- 0 5100000.0

- 1 2400000.0

3[] 2 items

- 0 5100000.0

- 1 2100000.0

4[] 2 items

- 0 4800000.0

- 1 2100000.0

proj:transform[] 6 items

- 0 20

- 1 0

- 2 4800000

- 3 0

- 4 -20

- 5 2400000

- sat:orbit_state "descending"

- sar:product_type "GRD"

- slice_gap_filled False

sar:polarizations[] 2 items

- 0 "VV"

- 1 "VH"

- sar:frequency_band "C"

- sat:relative_orbit 168

- sar:instrument_mode "IW"

- border_noise_removed True

- sar:center_frequency 5.405

- sar:resolution_range 40

- thermal_noise_removed True

- sar:resolution_azimuth 40

- sar:pixel_spacing_range 20

- sar:observation_direction "right"

- sar:pixel_spacing_azimuth 20
